Recently, a financial client sent me polling questions for an upcoming session. Among them was a question on insolvency resolution: they wanted attendees to priority rank three components from most to least important to their framework. In this scenario, I convinced my client to use an alternative to priority ranking.

Priority ranking lets the audience rank up to ten alternatives. For example, you can offer a list of five criteria and ask the audience to rank all five, using a predetermined point system that assigns values from most to least important, useful, necessary and so on. There’s also a less often considered and less often used feature of priority ranking: ranking fewer alternatives than are listed.

Limiting the selections also removes the need to know in advance which platforms apply to each participant. In other words, why make the audience rank 10 criteria if only three or six apply to them? That is why an ‘Other’ answer option is so useful for identifying your audience. And if a significant percentage of the audience responds ‘Other’, you can address that group and ask which platforms they find useful that weren’t listed.

I suggest using priority ranking only when listing more than five answer options. To that end, ask yourself whether each option beyond the fifth is truly useful or necessary for the results or data you’re seeking, and whether it is relevant to your presentation. Then decide how many of those options you want the audience to prioritize.

Your next step is to determine your point system. In my experience, clients, presenters, planners and the like neglect this feature too often, because their focus is on engaging the audience through feedback. However, this neglect also affects the value and accuracy of the data, which in turn can reflect on the quality of the presentation itself.

For example, say you want participants to answer a ranking question and you offer 10 criteria for them to rank. Now ask yourself:

-From those 10, how many do you let the participants rank? All of them? Their top five? Their top three?

-Once that’s established, what point ranking do you use? 10-9-8-7-6-5-4-3-2-1? 10-7-5-3-1? 10-7-2?

Below is an example of the difference between using preset and custom point systems. The question asks participants to rank their top five. Both sets of results use the same number of responding keypads and the same ranking order for each keypad’s five selections.

Notice how the results differ between the preset and custom point systems. By stretching your point values, you can better identify which keys are of greater priority.
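To make the effect of the point system concrete, here is a minimal Python sketch that totals the same ballots under two scales. The ballots, the criteria names A to D and the stretched 10-5-1 scale are hypothetical, chosen only for illustration. Each keypad ranks its top three of four criteria, so the sketch also covers ranking fewer alternatives than are listed.

```python
from collections import defaultdict


def score_ballots(ballots, points):
    """Total the points each criterion earns across all ballots.

    Each ballot lists criteria from most to least important. A ballot may
    rank fewer criteria than are offered, but not more than len(points).
    """
    totals = defaultdict(int)
    for ballot in ballots:
        if len(ballot) > len(points):
            raise ValueError("ballot ranks more items than the point system allows")
        for position, criterion in enumerate(ballot):
            totals[criterion] += points[position]
    # Highest total first; ties fall back to alphabetical order.
    return sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical ballots: five keypads, each ranking its top three of A-D.
ballots = [
    ["A", "C", "B"],
    ["A", "C", "D"],
    ["A", "C", "B"],
    ["B", "C", "D"],
    ["D", "C", "B"],
]

preset_points = [3, 2, 1]   # evenly spaced scale
custom_points = [10, 5, 1]  # hypothetical stretched scale

print(score_ballots(ballots, preset_points))  # [('C', 10), ('A', 9), ('B', 6), ('D', 5)]
print(score_ballots(ballots, custom_points))  # [('A', 30), ('C', 25), ('B', 13), ('D', 12)]
```

With the evenly spaced 3-2-1 scale, C edges out A because it is every keypad’s second choice; with the stretched 10-5-1 scale, A’s three first-place votes put it on top. The same responses, scored differently, produce a different winner, which is why the point system deserves a deliberate choice.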

Now, let’s play devil’s advocate: what if you or your attendees can’t select a single criterion that is most important and instead have TWO criteria that are equally important? How can a descending point system truly reflect their opinion? We all have preferences in life, both personally and professionally, but there are instances where we can’t prioritize one criterion over another, and a preference can’t be forced.

ADVICE: Review the wording of your polling question. If the wording leaves room to doubt the question’s validity, it shouldn’t be asked. In those cases, I often suggest to a client that we go back to the drawing keyboard, and I help them rework it.

Back to my client scenario.

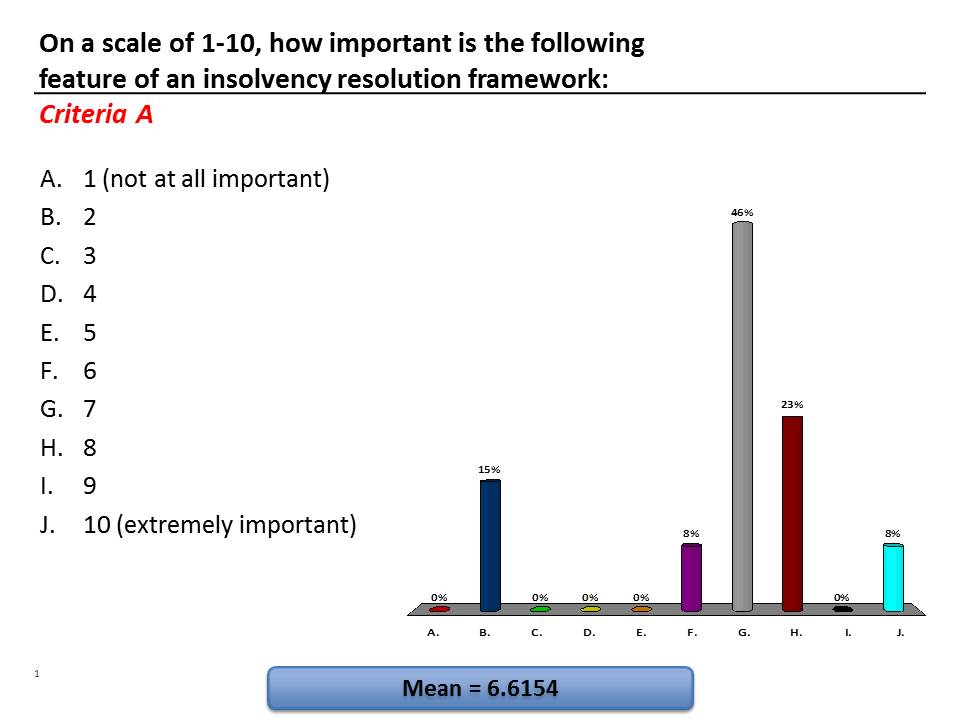

They had only three criteria to offer as options. When I asked, they agreed that all three criteria applied to everyone in the audience. They also agreed that a preset ranking system limited the quality of the results. Enter the solution: mean score ranking.

Instead of priority ranking the three criteria on one slide, the audience voted on each criterion individually on a scale of 1 to 10. The extra time needed to poll three questions instead of one was minimal: a single, demanding question that asks attendees to rank three or more options can take longer to answer than three simpler questions. Polling each criterion separately also lets your audience focus on one component at a time and judge it on its own merits.
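Mean score ranking is simple to compute once each criterion has its own 1-to-10 poll. The Python sketch below assumes the keypad votes can be exported as a list of scores per criterion; the vote data is hypothetical. It averages each criterion’s scores and sorts the criteria from highest to lowest mean.

```python
from statistics import mean


def mean_score_ranking(votes_by_criterion):
    """Rank criteria by their mean 1-10 score, highest first.

    votes_by_criterion maps each criterion name to the list of scores
    it received on its own polling slide.
    """
    means = {}
    for criterion, scores in votes_by_criterion.items():
        if not scores:
            raise ValueError(f"no votes recorded for {criterion}")
        if any(not 1 <= s <= 10 for s in scores):
            raise ValueError(f"score outside 1-10 for {criterion}")
        means[criterion] = mean(scores)
    # Highest mean first; ties fall back to alphabetical order.
    return sorted(means.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical votes from five keypads, one slide per criterion.
votes = {
    "Criterion A": [9, 8, 10, 7, 9],
    "Criterion B": [6, 7, 5, 8, 6],
    "Criterion C": [7, 6, 6, 7, 7],
}

for criterion, avg in mean_score_ranking(votes):
    print(f"{criterion}: {avg:.1f}")
```

Because each criterion is scored independently, two criteria can earn nearly the same mean (6.6 and 6.4 here), something a forced descending point system cannot express.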

The panel discussed the results and used them to steer the direction of the discussion. The results also allowed the panel to address Criteria B and C in greater depth, based on their scores and on the nearly identical priority the audience gave them.

If the presentation doesn’t involve a Q&A with the presenter, a Likert scale of answer options can achieve a similar effect. You can use a range from ‘Very Important’ to ‘Not Important At All,’ from ‘Strongly Agree’ to ‘Strongly Disagree,’ and so on. With this scale, you can also add options such as ‘Does not apply to me’ or ‘I Don’t Know’ to increase participation and better identify your audience.
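One way to tally such a scale is sketched below in Python, under stated assumptions: a five-point scale from ‘Very Important’ (5) to ‘Not Important At All’ (1), where the three middle labels are hypothetical, and where ‘Does not apply to me’ and ‘I Don’t Know’ are counted separately rather than scored. Keeping those answers out of the average stops them from dragging the score down, while their share still tells you something about who is in the room.

```python
# Assumed five-point scale; the three middle labels are hypothetical.
LIKERT_POINTS = {
    "Very Important": 5,
    "Important": 4,
    "Somewhat Important": 3,
    "Not Very Important": 2,
    "Not Important At All": 1,
}
# Answers that are counted but deliberately left out of the average.
NON_SUBSTANTIVE = {"Does not apply to me", "I Don't Know"}


def summarize_likert(responses):
    """Return the mean of scored answers plus the share of non-substantive ones."""
    scored = []
    skipped = 0
    for answer in responses:
        if answer in LIKERT_POINTS:
            scored.append(LIKERT_POINTS[answer])
        elif answer in NON_SUBSTANTIVE:
            skipped += 1
        else:
            raise ValueError(f"unknown answer option: {answer!r}")
    return {
        "responses": len(responses),
        "scored": len(scored),
        "non_substantive_share": skipped / len(responses) if responses else 0.0,
        "mean": sum(scored) / len(scored) if scored else None,
    }


# Hypothetical keypad responses to one Likert question.
sample = [
    "Very Important", "Important", "Important",
    "I Don't Know", "Does not apply to me", "Somewhat Important",
]
print(summarize_likert(sample))
```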